As the need for intelligent applications expands, developers are increasingly relying on artificial intelligence (AI) and machine learning (ML) to enrich their application’s capabilities. Amongst the numerous conversational AI delivery methods, chatbots have become highly sought after. OpenAI’s ChatGPT, a robust large language model (LLM), is an excellent solution for creating chatbots that can comprehend natural language and provide intelligent replies. ChatGPT has gained popularity since its launch in November 2022.

In this blog post, myself and a colleague, Sandeep Nair, walk through our experience in learning the Large Language Models that power OpenAI’s ChatGPT service and API by creating a Cosmos DB + ChatGPT sample application that mimics its functionality, albeit to a lesser extent. Our sample combines ChatGPT and Azure Cosmos DB which is used to persist all of the data for this sample application. Throughout this blog post, as we go through the sample we built, we’ll also explore other ways combining a database like Azure Cosmos DB can enhance the experience for users when building intelligent applications. As you’ll come to see, combining Azure Cosmos DB with ChatGPT provided benefits in ways that turned out to be more valuable than we anticipated.

To run this sample application, you need to have access to Azure OpenAI Service. To get access within your Azure Subscription, apply here for Azure OpenAI Service.

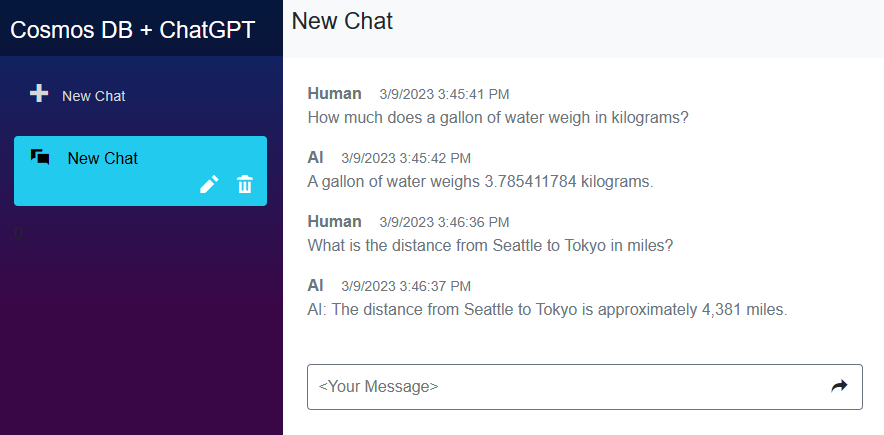

Let’s get to the application. Our application looks to show some of the functionality for the ChatGPT service that people are familiar with. Down the left-hand side are a list of conversations or “chat sessions”. You click on each of these for a different chat session. You can also rename or delete them. Within each chat session are “chat messages”. Each is identified by a “sender” as Human or AI. The messages are listed in ascending chronological order with a UTC timestamp. A text box at the bottom is used to type in a new prompt to add to the session.

Before getting too far here, some definitions. When interacting with a Large Language Model (LLM) service like ChatGPT, you would typically ask a question and the service gives you an answer. To put these into the vernacular, the user supplies a “prompt”, and the service provides a “completion”. For our sample here we used the text-davinci-003 model. The current ChatGPT is based upon a newer model, gpt-35-turbo.

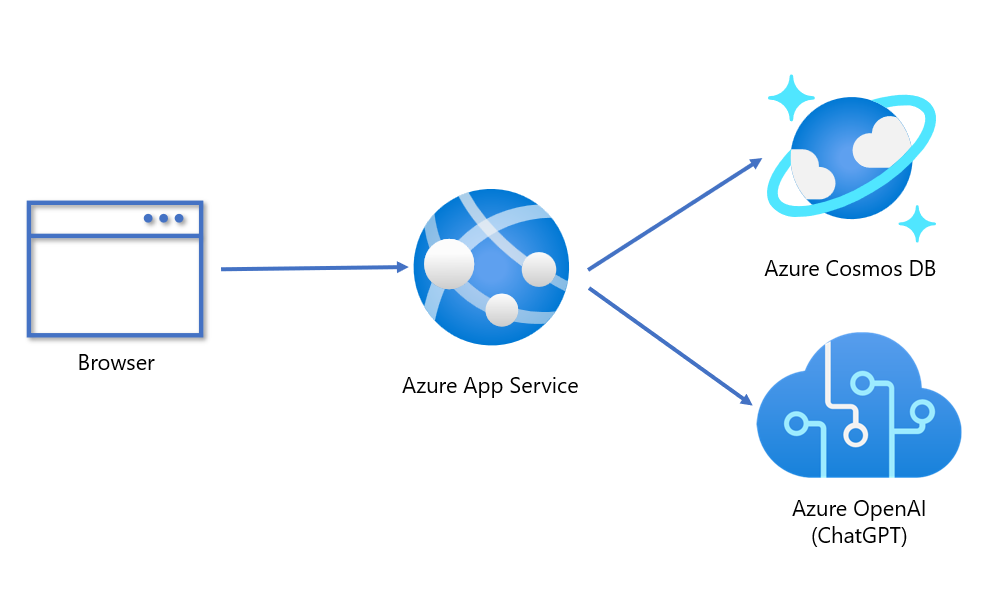

Below is the architecture for our sample. The front-end is a Blazor web application hosted in Azure App Service. This connects to Azure Cosmos DB as the database and the Azure OpenAI service which hosts the ChatGPT model. To make it as easy as possible to deploy our sample application, look for the “Deploy to Azure” button in the readme file for our sample on GitHub. The ARM template will handle all the connection information, so you don’t have to copy and paste keys. It’s completely zero-touch deployment.

Here is the data model for our application. Very simple with only two classes, chat session and chat message. Everything is stored in a single container.

If you’ve used ChatGPT at chat.openai.com you may have noticed that in addition to just answering single prompts, you can have a conversation with it as well. ChatGPT gives you an answer, you ask a follow-up without any additional context and ChatGPT responds in a contextually correct way as if you were having a conversation with it.

When designing our sample application using the underlying text-davinci-003 LLM, my initial assumption was this LLM has some clever way of preserving user context between completions and this was in some way part of the LLM available on Azure. I wanted to see this in action, so I tested the service’s API with prompts I had typed in. However, the service couldn’t remember anything. The completions were completely disjointed.

Let me show you. In this chat below I asked what the seating capacity is for Lumen Field in Seattle. However, in my follow-up, “Is Dodger Stadium bigger?”, it gives a response that is contextually incorrect and factually meaningless. It looks as though our app either is having a case of short-term memory loss or is responding to someone else asking a similar question.

What exactly is happening here? Well, it turns out my assumption was not correct. The underlying text-davinci-003 LLM does not have some clever mechanism for maintaining context to enable a conversation. That is something YOU need to provide so that the LLM can respond appropriately.

One way to do this is to send the previous prompts and completions back to the service with the latest prompt appended for it to respond to. With the full history of the conversation, it now has the information necessary to respond with a contextually and factually correct response. It can now infer what we mean when we ask, “Is that bigger than Dodger Stadium?”.

Let’s look at the same chat when I send the previous prompts and completions in my follow-up.

Clearly the response is now contextually aligned with the rest of the conversation. The sample application is now able to mimic ChatGPT’s conversational experience for users.

While providing a history of a chat is a simple solution, there are some limitations to this. These Large Language Models are limited in how much text you can send in a request. This is gated by “tokens”. Tokens are a form of compute currency and can vary in value from one character to one word in length. They are allocated by the service on a per-request basis for a deployed model. Also, the maximum amounts allowed vary across models. Currently for the “text-davinci-003” this sample is based on, the maximum number of tokens per request is 4000. In the sample we built, we tested various values for tokens. Here are some things to consider when building an app like this.

First, tokens are consumed in both the request and the response. If you use 4000 tokens sending a complete history in the HTTP request, you won’t get anything back in the response because your tokens were all consumed processing all the text you sent in the request.

Second, setting the token limit aside, sending large payloads of text on every request is just not something you really want to do. It’s expensive on the client, consumes lots of bandwidth, and increases overall latency.

To deal with these practical considerations, we limited the amount of memory by sending only the most recent prompts and completions for that conversation. This would allow it to mostly respond with the context it needed to carry on a conversation. However, if a conversation drifts significantly over time, context increasingly would be lost and follow-up questions to older prompts might result in contextually incorrect responses again. Still, this works good enough.

How we implemented this was quite simple. First, we set a maximum length for a conversation sent to the service. We first started by setting a maximum number of tokens a request could use. We set this to 3000 to be conservative. We then calculated the maxConversationLength to be half of the maximum token value set.

When the request to our app is made, the length of the entire conversation is compared to the maxConversationLength. If it is larger, that value is used in a substring function to strip off the oldest bytes, leaving only the most recent prompts and responses in the conversation.

There is another approach for maintaining conversational context which I’ve seen others suggest and you might consider. You can maintain context for a conversation by first asking the LLM to summarize the conversation, then use that instead of sending the full or partial history of the conversation in each request. The benefit of course is drastically reducing the amount of text needed to maintain that context.

There are however some questions I have about this technique that I did not get around to fully answering. For instance, how often should I refresh that summary? How would I do subsequent refreshes that maintain fidelity? Can I maintain fidelity over a long conversation with just limited bytes to store? After pondering these and other questions, we decided that, at least for now, this wasn’t worth the effort. Our sample application’s implementation was good enough for most use cases. We’ll come back later and try this.

However, we did end up using this approach, but for something different. If you’ve used ChatGPT recently, you may notice it renames the chat with a summary of what you asked. We decided to do the same thing and asked our app to summarize the chat session on what the first prompt is. Here is how we did it.

We tried a bunch of different prompts here as well. But it turns out that just telling it what you want and why works best.

It turns out that what I’ve described in giving an LLM a memory is part of a larger concept around prompts that provide the model with greater context needed to interact with users. There are different types of prompts as well. In addition to user prompts that are used to provide context for a conversation, you can use starting, or system prompts to instruct a chat model to behave a certain way. This can include lots of things from whether to be, “friendly and helpful” or “factual and concise” or even, “playful and snarky”.

This process of writing a sample application gave me lots to think about. Our intention was to create a simple intelligent chat application that had some ChatGPT features using Cosmos DB to store chat messages. But it got me starting to think about how else I could combine a database like Azure Cosmos DB with a large language model like text-davinvi-003. It didn’t take me long to think of various scenarios.

For instance, if I was building a chatbot retail experience, I could use starting prompts to load user profile information for a user’s web session. This could include information on product or other recommendations. “Hi Mark, your mom’s birthday is next month, would you like to see some gift ideas?” There are limitless possibilities.

Prompts are great at providing information and arming a chat bot with some simple information. However, there’s a limit to how much data you can feed into a prompt. What if you wanted to build an intelligent chat that had access to data for millions of customers or product recommendations or other data points? You can’t cram GB of data into a prompt. For this scenario you need to customize the model through fine-tuning to train the model at much greater scale and depth. Not only does this result in better responses, but it saves in tokens and reduces latency from smaller request payloads.

All this starts with the data in your database!

We’ve shown here what you can do with just a simple example, we’re going to look further. Expect more from us in this space with additional samples and blog posts detailing what we’re learning as we embark on this journey to enable users to create intelligent applications and services using Azure OpenAI and Azure Cosmos DB.

- Download and explore our Azure Cosmos DB + ChatGPT Sample App on GitHub

- Get your Free Azure Cosmos DB Trial

- Open AI Platform documentation

Post Disclaimer

The information provided in our posts or blogs are for educational and informative purposes only. We do not guarantee the accuracy, completeness or suitability of the information. We do not provide financial or investment advice. Readers should always seek professional advice before making any financial or investment decisions based on the information provided in our content. We will not be held responsible for any losses, damages or consequences that may arise from relying on the information provided in our content.