Cloud operations shift from dashboards to self-optimizing, financially aware, carbon-conscious platforms

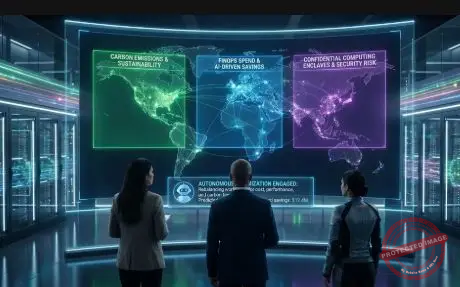

Cloud management platforms are quietly turning into the strategic control planes of digital business. By 2026, they will no longer be just places to tag resources and check invoices; they will orchestrate AI-driven automation, enforce FinOps guardrails, manage edge clusters, embed confidential computing, and shine a bright light on the carbon cost of every workload. For CIOs, CTOs and CFOs, the CMP becomes the cockpit where performance, risk, cost and sustainability decisions intersect.

From multi-cloud chaos to intelligent control planes

Many enterprises now run workloads across two or more hyperscalers plus some on-premises or colocation capacity. At the same time, AI, analytics, and latency-sensitive applications are growing fast, driving cloud bills up and making governance harder. Research from FinOps community working groups shows that AI workloads alone can radically change cost structures and forecasting complexity, demanding new practices and tooling to keep spend aligned with value (FinOps Foundation).

In response, the new generation of CMPs is built around intelligence, automation, and policy. Instead of static dashboards, they ingest live telemetry from infrastructure, applications, business KPIs, and energy signals. They then use AI and machine learning models to rebalance resources continuously, forecast demand, suggest rightsizing actions, and even simulate the financial and carbon impacts of deployment decisions. Recent industry analysis on AI-driven multi-cloud cost management reports savings of tens of millions of dollars for large enterprises that adopt this automated optimization at scale (JBAI).
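Rightsizing, one of the actions mentioned above, can be illustrated with a much simpler rule than an ML model. The sketch below is only that. It takes observed vCPU usage, computes a nearest-rank 95th percentile, and picks the cheapest size that keeps that peak under a 70% utilization target. The catalog, prices, and threshold are hypothetical and are not real provider SKUs or list prices.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSize:
    name: str          # hypothetical size label, not a real provider SKU
    vcpus: int
    hourly_usd: float  # illustrative price, not a real list price


# Hypothetical catalog, ordered from smallest to largest.
CATALOG = [
    InstanceSize("small", 2, 0.10),
    InstanceSize("medium", 4, 0.20),
    InstanceSize("large", 8, 0.40),
    InstanceSize("xlarge", 16, 0.80),
]


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_size(vcpu_used_samples, target_utilization=0.7, pct=95):
    """Cheapest size whose vCPUs keep the pct-th percentile demand under the target utilization."""
    peak = percentile(vcpu_used_samples, pct)
    for size in CATALOG:
        if peak <= size.vcpus * target_utilization:
            return size
    return CATALOG[-1]  # demand exceeds every size; cap at the largest


# Observed vCPUs in use on a workload currently running on "large".
samples = [1.2, 1.5, 2.0, 2.4, 1.8, 2.6, 1.9, 2.1, 2.2, 2.5]
current = CATALOG[2]
suggested = recommend_size(samples)
monthly_savings = (current.hourly_usd - suggested.hourly_usd) * 730  # ~730 hours per month
print(suggested.name, round(monthly_savings, 2))  # medium 146.0
```

A percentile is used rather than the maximum so that a single spike does not pin the workload to an oversized instance. The headroom target absorbs the rest.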

Six breakthrough CMP technologies defining 2026

By 2026, six technology pillars will reshape what “good” means in a cloud management platform.

The first pillar is AI-powered automation and optimization. Where early CMPs simply exposed cloud metrics, modern platforms feed those metrics into ML models that detect patterns, predict future consumption, and automatically apply policies. Analysts tracking AI-driven FinOps note that contemporary tools can correlate cloud bills, resource utilization, and even application performance to recommend or execute rightsizing and scaling decisions in real time (TechTarget).
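The "predict, then apply policy" loop can be sketched with a deliberately simple model: an ordinary least-squares trend over daily spend, extrapolated to the end of the month and compared against a budget. This stands in for the ML models described above. Production tools would likely account for seasonality and workload schedules. The spend figures and budget are illustrative.

```python
def fit_trend(values):
    """Ordinary least-squares line through (day index, value) pairs."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(values, horizon):
    """Extrapolate the fitted trend for the next `horizon` periods."""
    slope, intercept = fit_trend(values)
    n = len(values)
    return [intercept + slope * (n + h) for h in range(horizon)]


def projected_overrun(daily_spend, days_remaining, monthly_budget):
    """Spend so far plus forecast spend, minus budget; positive means a projected overrun."""
    projected = sum(daily_spend) + sum(forecast(daily_spend, days_remaining))
    return projected - monthly_budget


spend = [100.0, 110.0, 120.0, 130.0]  # illustrative daily spend in USD
overrun = projected_overrun(spend, days_remaining=2, monthly_budget=700.0)
if overrun > 0:
    # A real CMP might notify owners, open a ticket, or apply a scaling policy here.
    print(f"projected overrun: {overrun:.2f} USD")  # projected overrun: 50.00 USD
```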

The second pillar is deeply integrated FinOps. Dedicated FinOps platforms already normalize multi-cloud billing data and provide real-time views across AWS, Azure, and Google Cloud. In 2026, those capabilities are embedded inside CMPs as native services. FinOps vendors report that their platforms now bring engineering, finance, and product teams into a shared operating model, with common taxonomies and governance policies that span all providers (Ternary).
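Normalization means mapping each provider's billing rows onto one shared schema and taxonomy so that costs can be summed across clouds. The sketch below assumes hypothetical, simplified per-provider field names. Real AWS, Azure, and Google Cloud billing exports use different and much richer schemas. It also treats several tag spellings as the same "owning team" key.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRecord:
    provider: str
    service: str
    team: str
    cost_usd: float


# Hypothetical, simplified field names per provider; real billing exports use different schemas.
FIELD_MAP = {
    "aws": {"service": "product", "cost": "unblended_cost", "tags": "resource_tags"},
    "azure": {"service": "meter_category", "cost": "cost_usd", "tags": "tags"},
    "gcp": {"service": "service_description", "cost": "cost", "tags": "labels"},
}

# Shared taxonomy: tag keys that different teams use to mean "owning team".
TEAM_KEYS = ("team", "Team", "owner-team")


def normalize(provider, row):
    """Map one provider-specific billing row onto the common CostRecord schema."""
    fields = FIELD_MAP[provider]
    tags = row.get(fields["tags"]) or {}
    team = next((tags[k] for k in TEAM_KEYS if k in tags), "unallocated")
    return CostRecord(provider, row[fields["service"]], team.lower(), float(row[fields["cost"]]))


def cost_by_team(records):
    """Sum normalized costs per team across all providers."""
    totals = {}
    for r in records:
        totals[r.team] = totals.get(r.team, 0.0) + r.cost_usd
    return totals


rows = [
    ("aws", {"product": "EC2", "unblended_cost": "12.5", "resource_tags": {"Team": "Payments"}}),
    ("gcp", {"service_description": "Compute Engine", "cost": 7.5, "labels": {"team": "payments"}}),
    ("azure", {"meter_category": "Storage", "cost_usd": 3.0}),
]
print(cost_by_team(normalize(p, r) for p, r in rows))
# {'payments': 20.0, 'unallocated': 3.0}
```

Untagged spend is surfaced as "unallocated" instead of being dropped, so gaps in tagging stay visible to FinOps teams.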

The third pillar is confidential computing and preemptive cybersecurity. Confidential computing uses hardware-based trusted execution environments to protect data while it is being processed, not only at rest or in transit. All three major hyperscalers now offer trusted enclaves or confidential virtual machines, and CMPs are starting to treat those as first-class resources, automatically steering sensitive workloads into confidential runtimes and validating attestation reports before deployment (AWS in Plain English; Microsoft Azure; Decentriq).
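An attestation gate in front of deployment might look roughly like this sketch. It assumes that a provider attestation service has already verified the report's cryptographic signature and set a `signature_verified` flag. The CMP then applies only its own policy: report freshness and an allow-list of approved image measurements. The report fields, the freshness window, and the measurement value are all hypothetical placeholders.

```python
import hmac
import time

# Placeholder allow-list of approved confidential VM / enclave image measurements (hex digests).
# The value is made up for illustration.
APPROVED_MEASUREMENTS = {"a3f1" * 16}
MAX_REPORT_AGE_S = 300  # hypothetical freshness window in seconds


def admit_workload(workload, report, now=None):
    """Return (allowed, reason) for a deployment request.

    Assumes the provider's attestation service has already verified the report's
    signature and set `signature_verified`; this gate only applies CMP policy.
    """
    now = time.time() if now is None else now
    if workload["data_class"] != "confidential":
        return True, "no confidential runtime required"
    if report is None or not report.get("signature_verified"):
        return False, "missing or unverified attestation report"
    if now - report["issued_at"] > MAX_REPORT_AGE_S:
        return False, "attestation report is stale"
    measurement = report["measurement"]
    # Constant-time comparison against each approved measurement.
    if not any(hmac.compare_digest(measurement, m) for m in APPROVED_MEASUREMENTS):
        return False, "measurement not on allow-list"
    return True, "attested"


good = {"signature_verified": True, "issued_at": 1_000.0, "measurement": "a3f1" * 16}
print(admit_workload({"data_class": "confidential"}, good, now=1_100.0))  # (True, 'attested')
```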

The fourth pillar is seamless edge computing integration. Kubernetes has already extended its orchestration model to edge clusters, and new tools for managing fleets rather than single clusters are maturing. Research on edge AI and Kubernetes highlights just how challenging it is to deploy and operate hundreds or thousands of edge nodes, which is why CMPs are increasingly adding fleet-aware control planes that unify policy and monitoring across cloud and edge (Plural; World Wide Technology).

The fifth pillar is the rise of industry-specific cloud platforms. Healthcare, financial services, manufacturing, and public sector organizations face stringent regulatory and data residency requirements. Cloud providers and software vendors are responding with verticalized stacks that include prebuilt controls, data models, and compliance reporting. CMPs in 2026 expose these as configurable blueprints that can be deployed across multiple clouds and edge sites, with policy sets tuned to specific regulations and operational patterns.

The sixth pillar is sustainability and green cloud management. Recent academic and industry work warns that data centers may consume close to nine percent of global electricity by 2030 without aggressive efficiency measures (PMC; ResearchGate). CMPs are reacting by embedding carbon awareness directly into planning and operations. They rely on provider tools that estimate emissions, on carbon-aware scheduling SDKs, and on regional grid carbon-intensity data from hyperscalers such as Google Cloud (Carbon Aware SDK).
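Many footprint estimators use a similar core calculation. Energy drawn by the IT equipment is multiplied by the facility's power usage effectiveness (PUE) and then by the grid's carbon intensity. The sketch below applies that calculation to compare candidate regions. All region names, PUE values, and intensities are illustrative. It covers only operational emissions and ignores embodied hardware emissions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str                 # hypothetical region label
    pue: float                # facility power usage effectiveness (illustrative)
    grid_gco2_per_kwh: float  # average grid carbon intensity (illustrative)


def estimate_kg_co2e(avg_it_power_kw, hours, region):
    """Operational emissions: IT energy x PUE x grid intensity, converted from grams to kg."""
    facility_kwh = avg_it_power_kw * hours * region.pue
    return facility_kwh * region.grid_gco2_per_kwh / 1000.0


def greenest_region(avg_it_power_kw, hours, candidates):
    """Candidate region with the lowest estimated emissions for this workload."""
    return min(candidates, key=lambda r: estimate_kg_co2e(avg_it_power_kw, hours, r))


regions = [
    Region("region-a", pue=1.10, grid_gco2_per_kwh=400.0),
    Region("region-b", pue=1.20, grid_gco2_per_kwh=120.0),
    Region("region-c", pue=1.15, grid_gco2_per_kwh=250.0),
]
for r in regions:
    print(r.name, round(estimate_kg_co2e(2.0, 10.0, r), 2))
print("greenest:", greenest_region(2.0, 10.0, regions).name)
```

A facility with a worse PUE can still come out greener if its grid is much cleaner, which is why both factors are needed.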

AI-powered CMPs: from alarms to autonomous optimization

What truly distinguishes 2026-era CMPs is the extent to which AI is woven into their core. Vendors and cloud engineering teams are combining anomaly detection, forecasting, reinforcement learning, and causal analytics to move from “monitor and alert” to “observe, learn, and act.”

Leading cloud computing publications describe AI-powered FinOps as the dual engine of future cloud management, where predictive analytics and optimization engines work alongside human financial and engineering teams (Ilink Digital; Alphaus Cloud). AI models take in historical usage, business cycles, and AI workload training schedules to build demand curves. The CMP then simulates different scenarios, such as moving a GPU-heavy training job to another region or shifting it to a different time of day. It shows the trade-offs between performance, cost, and emissions before anything is executed.
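One simple way to present such trade-offs is to estimate each placement option and then discard the options that are dominated. An option is dominated when another one is at least as good on cost, emissions, and completion time and strictly better on at least one of them. This Pareto filter is a swapped-in illustration, not a documented CMP feature. Every price, power draw, and intensity below is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    cost_usd: float
    kg_co2e: float
    finish_h: float  # hours from now until the job completes


def build_scenario(name, gpus, run_h, usd_per_gpu_hour, kw_per_gpu, grid_gco2_per_kwh, start_delay_h):
    """Estimate one placement option; all inputs are caller-supplied assumptions."""
    gpu_hours = gpus * run_h
    cost = gpu_hours * usd_per_gpu_hour
    kg = gpu_hours * kw_per_gpu * grid_gco2_per_kwh / 1000.0
    return Scenario(name, cost, kg, start_delay_h + run_h)


def dominates(a, b):
    """True if `a` is no worse than `b` on every metric and strictly better on at least one."""
    ma = (a.cost_usd, a.kg_co2e, a.finish_h)
    mb = (b.cost_usd, b.kg_co2e, b.finish_h)
    return all(x <= y for x, y in zip(ma, mb)) and any(x < y for x, y in zip(ma, mb))


def pareto_front(scenarios):
    """Options not dominated by any other option; only these are worth showing to approvers."""
    return [s for s in scenarios if not any(dominates(o, s) for o in scenarios if o is not s)]


options = [
    build_scenario("home region, now", 8, 8, 2.50, 0.7, 450, start_delay_h=0),
    build_scenario("home region, overnight", 8, 8, 2.50, 0.7, 200, start_delay_h=10),
    build_scenario("other region, now", 8, 9, 2.80, 0.7, 90, start_delay_h=0),
    build_scenario("other region, overnight", 8, 9, 2.80, 0.7, 300, start_delay_h=10),
]
for s in pareto_front(options):
    print(f"{s.name}: ${s.cost_usd:.0f}, {s.kg_co2e:.1f} kg CO2e, done in {s.finish_h:.0f} h")
```

With these numbers, "other region, overnight" drops out because "home region, overnight" is cheaper, cleaner, and finishes sooner. The remaining three options are genuine trade-offs for humans to choose between.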

This autonomy does not mean humans are removed from the loop. Instead, CMPs allow teams to declare policy intent in business language. An enterprise can state that critical workloads must maintain a specific performance level, noncritical analytics must stay within a fixed budget, and sustainability targets require a maximum emissions threshold per unit of revenue. The AI layer continuously adjusts infrastructure to satisfy those constraints.
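Declared intent can be represented as a small set of constraints that the platform checks continuously. The sketch below encodes the three example policies from the paragraph above and reports violations, which an optimizer would then try to clear. The thresholds, workload data, and the choice of p99 latency as the performance metric are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    tier: str                # "critical" or "noncritical"
    p99_latency_ms: float
    monthly_cost_usd: float
    monthly_kg_co2e: float


@dataclass(frozen=True)
class PolicyIntent:
    critical_p99_ms: float               # performance floor for critical workloads
    noncritical_budget_usd: float        # fixed monthly budget for noncritical analytics
    max_kg_co2e_per_musd_revenue: float  # emissions-intensity ceiling


def violations(policy, workloads, monthly_revenue_usd):
    """Return human-readable constraint violations; an optimizer would act to clear them."""
    found = []
    for w in workloads:
        if w.tier == "critical" and w.p99_latency_ms > policy.critical_p99_ms:
            found.append(f"{w.name}: p99 {w.p99_latency_ms} ms > {policy.critical_p99_ms} ms")
    noncritical = sum(w.monthly_cost_usd for w in workloads if w.tier == "noncritical")
    if noncritical > policy.noncritical_budget_usd:
        found.append(f"noncritical spend {noncritical:.0f} USD > budget {policy.noncritical_budget_usd:.0f} USD")
    intensity = sum(w.monthly_kg_co2e for w in workloads) / (monthly_revenue_usd / 1e6)
    if intensity > policy.max_kg_co2e_per_musd_revenue:
        found.append(f"{intensity:.0f} kg CO2e per $1M revenue > {policy.max_kg_co2e_per_musd_revenue:.0f}")
    return found


policy = PolicyIntent(critical_p99_ms=200, noncritical_budget_usd=5_000, max_kg_co2e_per_musd_revenue=500)
fleet = [
    Workload("checkout", "critical", 180, 12_000, 300),
    Workload("analytics", "noncritical", 2_500, 6_000, 500),
]
print(violations(policy, fleet, monthly_revenue_usd=2_000_000))
# ['noncritical spend 6000 USD > budget 5000 USD']
```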

FinOps and CMPs converge into a single operating fabric

FinOps started as a discipline separate from day-to-day operations, often implemented in spreadsheets or standalone tools. As cloud complexity has grown, research indicates that this separation is no longer sustainable. FinOps now needs to live where infrastructure decisions are made, inside the CMP (Futuriom).

Modern CMPs, therefore, expose FinOps capabilities throughout their interface. When developers request new resources through self-service portals, they immediately see projected monthly and annual spend, historical cost patterns for similar workloads, and whether the request fits within team budgets. When a product owner considers launching a new AI feature, the CMP can estimate the incremental cost of inference traffic at different adoption rates, giving product and finance teams a realistic view of the financial envelope before launch.
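The two estimates described above are simple arithmetic once the inputs are known. The sketch below assumes a flat hourly rate, the common 730-hours-per-month approximation, and a hypothetical per-1,000-request inference price. Real pricing would also include discounts, data transfer, and tiered rates.

```python
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12)


def project_request(hourly_usd, instance_count, team_budget_remaining_usd):
    """Projection shown to a developer at request time in a self-service portal."""
    monthly = hourly_usd * instance_count * HOURS_PER_MONTH
    return {
        "monthly_usd": monthly,
        "annual_usd": monthly * 12,
        "fits_budget": monthly <= team_budget_remaining_usd,
    }


def inference_cost_usd(addressable_users, adoption_rate, requests_per_user, usd_per_1k_requests):
    """Monthly inference cost of an AI feature at a given adoption rate."""
    requests = addressable_users * adoption_rate * requests_per_user
    return requests / 1000 * usd_per_1k_requests


print(project_request(0.50, 2, team_budget_remaining_usd=1_000))
for rate in (0.05, 0.20, 0.50):
    cost = inference_cost_usd(200_000, rate, requests_per_user=30, usd_per_1k_requests=0.40)
    print(f"adoption {rate:.0%}: ~{cost:,.0f} USD/month")
```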

FinOps practitioners also gain new telemetry. Instead of trying to reverse engineer costs from invoices after the fact, they can rely on CMP instrumentation that tags workloads correctly, allocates shared services by usage, and tracks commitments against negotiated discounts. Combined with AI forecasting, this transforms FinOps from a reactive practice into a strategic planning function.
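Usage-based allocation of shared services and tracking of commitments can both be sketched in a few lines. The example below splits a shared bill in proportion to measured usage, for instance CPU-hours on a shared cluster. It also reports how much of a commitment was used and how much was paid for but left idle. All names and figures are illustrative.

```python
def allocate_shared_cost(shared_cost_usd, usage_by_team):
    """Split a shared service bill in proportion to each team's measured usage."""
    total = sum(usage_by_team.values())
    if total <= 0:
        raise ValueError("no usage recorded for the shared service")
    return {team: shared_cost_usd * used / total for team, used in usage_by_team.items()}


def commitment_report(committed_hours, covered_usage_hours, committed_hourly_usd):
    """How much of a commitment was used, and how much was paid for but idle."""
    used = min(covered_usage_hours, committed_hours)
    return {
        "utilization": used / committed_hours,
        "unused_usd": (committed_hours - used) * committed_hourly_usd,
    }


# e.g., a shared cluster's monthly bill, split by CPU-hours consumed per team
print(allocate_shared_cost(1_000.0, {"search": 300, "payments": 100}))
# {'search': 750.0, 'payments': 250.0}
print(commitment_report(committed_hours=1_000, covered_usage_hours=850, committed_hourly_usd=0.30))
```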

Confidential computing and preemptive security as default

Security teams see similar shifts. Confidential computing technologies from Azure, AWS, and Google Cloud encrypt data in memory and enforce hardware-level isolation for sensitive workloads (Anjuna Security; Microsoft Azure; Duality Technologies). Rather than asking developers to choose these options manually, CMPs can mandate them for specific data classifications, ensuring that customer records, regulated financial data, or intellectual property are always processed in trusted execution environments.
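Mandating a runtime by data classification can be as simple as a policy lookup applied to every deployment request. The sketch below upgrades any request that touches a sensitive classification to a confidential runtime and records the reason. The classification labels, runtime names, and request fields are hypothetical.

```python
# Hypothetical data classifications that policy says must run in a trusted execution environment.
CONFIDENTIAL_CLASSES = {"customer_record", "regulated_financial", "intellectual_property"}


def enforce_runtime(request):
    """Return a copy of the deployment request with the runtime the policy requires.

    Instead of asking developers to choose, the CMP upgrades any request touching
    a confidential classification to a confidential runtime and records why.
    """
    sensitive = CONFIDENTIAL_CLASSES & set(request["data_classes"])
    enforced = dict(request)
    if sensitive:
        enforced["runtime"] = "confidential_vm"
        enforced["policy_note"] = "confidential runtime required for: " + ", ".join(sorted(sensitive))
    return enforced


req = {"name": "claims-scoring", "runtime": "standard_vm", "data_classes": ["customer_record", "telemetry"]}
print(enforce_runtime(req)["runtime"])  # confidential_vm
```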

At the same time, preemptive cybersecurity capabilities are becoming integral to CMP architectures. AI models trained on logs, network flows, and API traces can detect emerging attack patterns before they escalate. CMPs can then automatically limit the blast radius by revoking credentials, reconfiguring network paths, or spinning up clean environments and gracefully failing over. Over time, this can cut the mean time to detect and respond from hours to minutes.
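The detect-then-contain flow can be sketched with a plain statistical baseline in place of a trained model. The sketch flags a credential whose API call rate sits more than four standard deviations above its recent history and returns containment actions. The z-score rule, the threshold, and the action names are hypothetical. A real CMP would call provider IAM and network APIs rather than return strings.

```python
import statistics


def z_score(history, current):
    """How many sample standard deviations `current` sits above the historical mean."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return float("inf") if current > mean else 0.0
    return (current - mean) / stdev


def respond(credential_id, history, current, threshold=4.0):
    """Return containment actions when API call volume for a credential spikes."""
    if len(history) < 2 or z_score(history, current) <= threshold:
        return []
    # Hypothetical action names standing in for IAM and network API calls.
    return [f"revoke_credential:{credential_id}", f"quarantine_workloads_using:{credential_id}"]


baseline = [100, 104, 98, 101, 97, 100]  # API calls per minute over recent windows
print(respond("ci-deploy-key", baseline, 103))  # []
print(respond("ci-deploy-key", baseline, 500))  # two containment actions
```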

Managing one fabric from cloud to edge

Edge computing complicates cloud management by multiplying the number of sites, hardware profiles, and connectivity conditions. However, it also offers significant benefits for latency, bandwidth optimization, and resilience. Industry blogs and research pieces emphasize that Kubernetes-based approaches with fleet-level control are becoming the key mechanism to unify cloud and edge (overcast blog; Plural; World Wide Technology).

By 2026, CMPs treat each edge domain as another endpoint in the same management fabric, even though the underlying infrastructure is very different from centralized hyperscaler regions. Policies for data residency, latency-sensitive services, local AI inference, and security are defined once and compiled into deployment artifacts tailored to each edge location. When a new store, factory, ship, or roadside cabinet comes online, it checks in with the CMP, retrieves its desired state, and joins the global observability and FinOps telemetry pool.
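The "define once, compile per site, check in" pattern can be sketched as follows. A global policy is merged with per-site facts to produce a desired state. An edge agent reports a hash of the state it currently runs and receives the full desired state only when it has drifted or is new. The policy keys, site inventory, and model name are hypothetical.

```python
import hashlib
import json

# Policies defined once for the whole fleet (values are hypothetical).
GLOBAL_POLICY = {
    "keep_raw_data_in_region": True,
    "inference_model": "defect-detector:v3",
    "max_local_retention_days": 7,
}

# Per-site facts the CMP knows about each edge location (hypothetical inventory).
SITES = {
    "factory-eu-01": {"region": "eu", "has_gpu": True},
    "store-us-17": {"region": "us", "has_gpu": False},
}


def compile_site_state(site_id):
    """Compile the global policy into the desired state for one edge site."""
    site = SITES[site_id]
    state = dict(GLOBAL_POLICY, site=site_id)
    state["data_sink"] = (
        f"{site['region']}-regional-store" if GLOBAL_POLICY["keep_raw_data_in_region"] else "central-store"
    )
    state["inference_runtime"] = "gpu" if site["has_gpu"] else "cpu"
    return state


def state_hash(state):
    """Stable fingerprint of a desired state (keys sorted so ordering does not matter)."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def check_in(site_id, reported_hash):
    """Called by an edge agent; returns the desired state only if the site has drifted."""
    desired = compile_site_state(site_id)
    digest = state_hash(desired)
    if digest == reported_hash:
        return {"in_sync": True, "hash": digest}
    return {"in_sync": False, "hash": digest, "desired_state": desired}


first = check_in("factory-eu-01", reported_hash="")  # new site: no state yet
print(first["desired_state"]["data_sink"])  # eu-regional-store
print(check_in("factory-eu-01", first["hash"])["in_sync"])  # True
```

Sending only a hash on routine check-ins keeps traffic small over constrained edge links. The full state travels only when something has changed.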

Green cloud: CMPs as sustainability copilots

Sustainability is no longer a separate CSR initiative; it is a design and operational constraint. Recent research on sustainable cloud computing and carbon footprint calculators compares the tools offered by hyperscalers and highlights wide differences in precision and usability, but the direction is unmistakable: emissions are becoming a first-class metric (ScitePress; ResearchGate).

CMPs are starting to unify this data into green dashboards that show not only spend per workload but also energy use and emissions intensity. Combined with carbon-aware scheduling libraries, these platforms can move noncritical workloads to times and regions where grid carbon intensity is lower, and surface trade-offs for business stakeholders to approve (ScienceDirect; Carbon Aware SDK). For organizations with aggressive ESG goals, CMPs become the system of record for digital emissions, enabling more accurate reporting and giving product teams direct feedback on the environmental cost of their architectures.
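Time shifting a deferrable job reduces to a sliding-window search over a carbon-intensity forecast. The search chooses the start hour whose window has the lowest average intensity, subject to the job still finishing by its deadline. The sketch below is an independent illustration and does not reproduce the Carbon Aware SDK's API. The forecast values are made up.

```python
def best_start_hour(forecast_gco2_per_kwh, duration_h, deadline_h):
    """Pick the start hour that minimizes average grid intensity while finishing by the deadline.

    forecast_gco2_per_kwh[i] is the forecast intensity for hour i from now.
    Returns (start_hour, average_intensity); ties go to the earliest start.
    """
    last_start = min(deadline_h, len(forecast_gco2_per_kwh)) - duration_h
    if duration_h <= 0 or last_start < 0:
        raise ValueError("job cannot finish within the deadline and forecast horizon")
    best = None
    for start in range(last_start + 1):
        avg = sum(forecast_gco2_per_kwh[start:start + duration_h]) / duration_h
        if best is None or avg < best[1]:
            best = (start, avg)
    return best


forecast = [400, 380, 300, 200, 180, 250, 350]  # illustrative hourly forecast, gCO2e/kWh
print(best_start_hour(forecast, duration_h=2, deadline_h=6))  # (3, 190.0)
print(best_start_hour(forecast, duration_h=2, deadline_h=4))  # (2, 250.0)
```

The deadline is what keeps this safe for business stakeholders. A tighter deadline, as in the second call, trades some carbon savings for timeliness instead of letting the scheduler delay work indefinitely.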

Closing thoughts and looking forward

By 2026, cloud management platforms will no longer be just convenience layers on top of AWS, Azure, or Google Cloud. They are becoming intelligent operating fabrics that link cloud engineering, security, finance, and sustainability into a single continuous feedback loop. AI-powered automation reduces toil and waste, FinOps integration aligns spend with value, confidential computing hardens the most sensitive workloads, edge integration keeps data close to where it is generated, vertical platforms accelerate regulated industries, and embedded sustainability tools transform emissions from an afterthought into a design input.

The next wave of innovation will likely focus on interoperability and openness. As enterprises adopt multiple CMPs or combine native hyperscaler tools with third-party platforms, standards for policy, telemetry, and sustainability metrics will matter more. But the direction is clear: in an era of AI-accelerated demand and tightening ESG expectations, organizations that treat CMPs as strategic control planes rather than auxiliary dashboards will be best positioned to control costs, protect data, and operate responsibly at scale.

References

Cloud cost management and FinOps for the AI era – Futuriom – https://www.futuriom.com/articles/news/cloud-cost-management-and-finops-for-the-ai-era/2024/04

Implement AI-driven cloud cost optimization to reduce waste – TechTarget – https://www.techtarget.com/searchcloudcomputing/tip/Implement-AI-driven-cloud-cost-optimization-to-reduce-waste

AI-Driven Multi-Cloud Cost Management: Strategic Necessity or Hype – Journal of Big Data and Artificial Intelligence (jbai.ai) – https://jbai.ai/index.php/jbai/article/view/32/19

What is confidential computing? Definition and use cases – Decentriq – https://www.decentriq.com/article/what-is-confidential-computing

Green and sustainable cloud computing: Strategies for carbon footprint reduction and energy optimization – International Journal of Software Engineering & Applications (via ResearchGate) – https://www.researchgate.net/publication/392609977_GREEN_AND_SUSTAINABLE_CLOUD_COMPUTING_STRATEGIES_FOR_CARBON_FOOTPRINT_REDUCTION_AND_ENERGY_OPTIMIZATION

Benoit Tremblay, Author, Tech Cost Management, Montreal, Quebec;

Peter Jonathan Wilcheck, Co-Editor, Miami, Florida.

#CloudManagementPlatforms #CloudFinOps #AIDrivenAutomation #MultiCloudStrategy #ConfidentialComputing #EdgeComputing #GreenCloud #SustainableIT #CloudCostOptimization #HybridCloudGovernance

Post Disclaimer

The information provided in our posts or blogs is for educational and informational purposes only. We do not guarantee the accuracy, completeness, or suitability of the information. We do not provide financial or investment advice. Readers should always seek professional advice before making any financial or investment decisions based on the information provided in our content. We will not be held responsible for any losses, damages, or consequences that may arise from relying on the information provided in our content.
