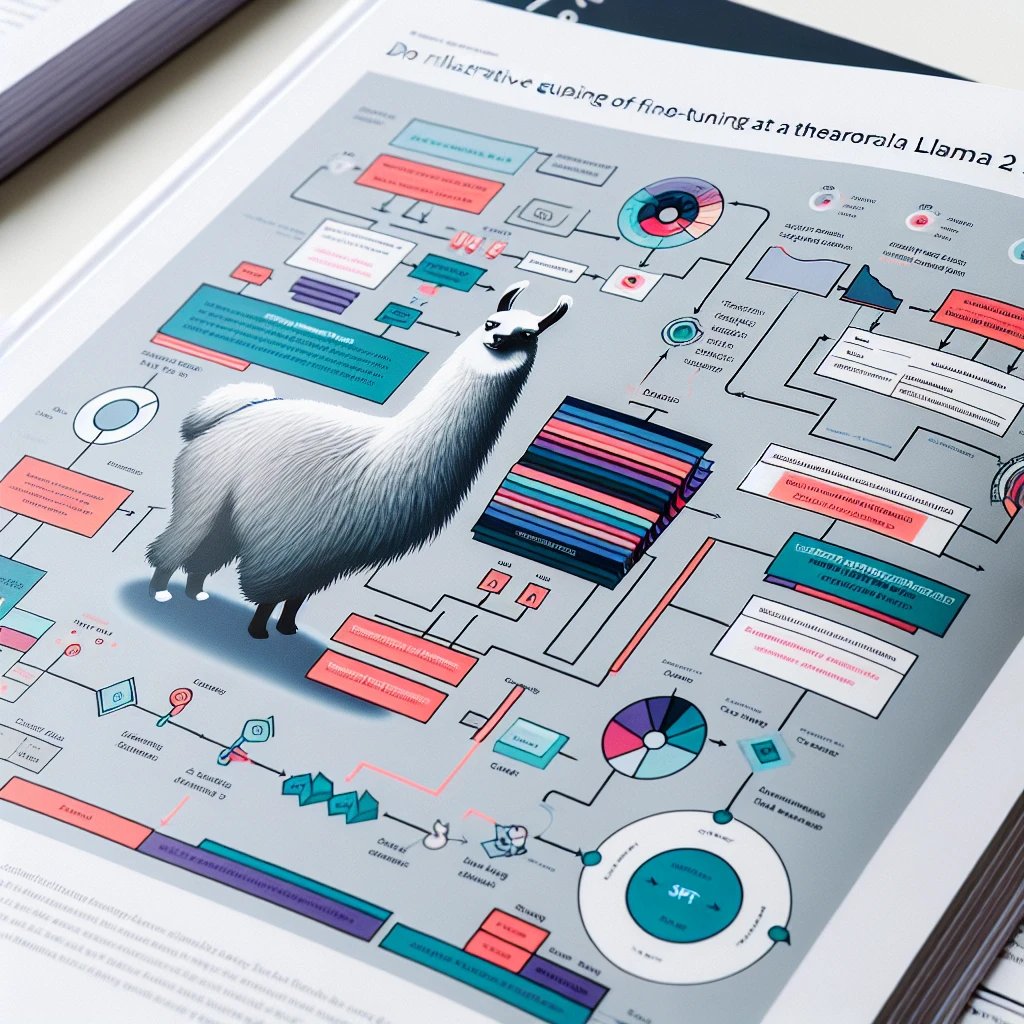

LLaMA 2 has finally arrived, and it lives up to expectations. The new model delivers significant performance improvements over its predecessor and is licensed for commercial use. As enthusiasts and developers rush to put this powerful tool to work, many are keen to adapt LLaMA 2 to their own use cases. In this article, we will explore how to fine-tune LLaMA 2 using two methods: SFT (supervised fine-tuning of all parameters) and LoRA (low-rank adaptation).

A Brief Introduction to LLaMA 2

Llama 2 is a set of pretrained and fine-tuned LLMs that range from 7 billion to 70 billion parameters. The model architecture is similar to LLaMA 1, but with an extended context length and the inclusion of Grouped Query Attention (GQA) to enhance inference scalability. Autoregressive decoding normally caches the key and value pairs for previous tokens in the sequence to accelerate attention computation. GQA shrinks that cache by letting each group of query heads share a single key/value head, which lowers memory use at inference time. In Llama 2, GQA is used in the larger models (34B and 70B). Other notable aspects include:

- Training on 2 trillion tokens of data

- Extended context length of 4K

- Use of Ghost Attention (GAtt), a new method for multi-turn consistency
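To make the memory effect of GQA concrete, here is a rough back-of-the-envelope sketch. It assumes the published Llama 2 70B shape (80 layers, 64 query heads, 8 key/value heads, head dimension 128), 16-bit cache values, and a single sequence at the full 4K context. The helper name `kv_cache_bytes` is made up for this illustration, and real inference stacks add their own overhead.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Approximate bytes needed to cache keys and values for one sequence."""
    # Factor 2: every layer stores one tensor for keys and one for values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed Llama 2 70B shape (see the lead-in); 16-bit values by default.
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM = 80, 64, 8, 128
SEQ_LEN = 4096
GIB = 1024 ** 3

# Standard multi-head attention keeps one key/value head per query head.
mha_bytes = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# GQA keeps one key/value head per group of query heads.
gqa_bytes = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"Multi-head attention cache: {mha_bytes / GIB:.2f} GiB")  # 10.00 GiB
print(f"Grouped-query attention cache: {gqa_bytes / GIB:.2f} GiB")  # 1.25 GiB
```

Under these assumptions the cache shrinks by the ratio of query heads to key/value heads, a factor of 8 here.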

LLaMA 2 Benchmark

Llama 2 models surpass Llama 1 models. Specifically, Llama 2 70B improves the results on MMLU and BBH by approximately 5 and 8 points, respectively, compared to Llama 1 65B. Llama 2 7B and 34B outperform MPT models of comparable size (7B and 30B) in all categories except code benchmarks. Against the Falcon models, Llama 2 7B and 34B outperform Falcon 7B and 40B in all benchmark categories. Additionally, the Llama 2 70B model outperforms all other open-source models in the comparison.

When compared to closed-source LLMs, Llama 2 70B is close to GPT-3.5 on MMLU and GSM8K, but there is a significant gap on coding benchmarks. Llama 2 70B results are on par with or better than PaLM (540B) on almost all benchmarks. However, there is still a substantial performance gap between Llama 2 70B and both GPT-4 and PaLM-2-L.

Fine-tuning Llama 2 with SFT

In this example, I will walk you through the steps to fine-tune LLaMA 2 using Supervised fine-tuning (SFT). SFT optimizes an LLM in a supervised manner using examples of dialogue data that the model should replicate. The SFT dataset is a collection of prompts and their corresponding responses. SFT datasets can be manually curated by users or generated by other LLMs. To begin the fine-tuning, the first step is to set up the development environment.
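Before moving on, it may help to see what a single SFT training example can look like once tokenized. The sketch below is an illustration, not the script's actual preprocessing: `toy_tokenize` is a hypothetical stand-in for a real tokenizer, and masking the prompt with -100 (the default `ignore_index` of PyTorch's cross-entropy loss) is a common convention that some SFT setups skip, training on all tokens instead.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss

_vocab = {}


def toy_tokenize(text):
    """Hypothetical stand-in tokenizer: one integer id per whitespace-separated word."""
    return [_vocab.setdefault(word, len(_vocab)) for word in text.split()]


def build_example(prompt, response, tokenize):
    """Concatenate prompt and response; compute the loss only on response tokens."""
    prompt_ids = tokenize(prompt)
    response_ids = tokenize(response)
    return {
        "input_ids": prompt_ids + response_ids,
        # Prompt positions are masked so they do not contribute to the loss.
        "labels": [IGNORE_INDEX] * len(prompt_ids) + response_ids,
    }


example = build_example("What is the capital of France?", "Paris.", toy_tokenize)
print(example["input_ids"])
print(example["labels"])
```

With this layout the model still sees the prompt as context, but only the response tokens are scored.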

Setting Up Development Environment

Install torch and transformers for PyTorch and the Hugging Face Transformers library, respectively, and datasets for loading and processing datasets.
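As a quick sanity check after installation, a small sketch like the following reports which of the three packages are not importable yet. The helper name `missing_packages` is made up for this example, and it only checks that each package can be found, not which version is installed.

```python
from importlib.util import find_spec

# The three packages named above.
REQUIRED = ("torch", "transformers", "datasets")


def missing_packages(names=REQUIRED):
    """Return the packages from `names` that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Missing packages; try: pip install " + " ".join(missing))
else:
    print("All required packages are importable.")
```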

Loading Model and Tokenizer

The script loads the base model and tokenizer for the Llama model from Hugging Face Transformers using the LlamaForCausalLM and LlamaTokenizer classes.
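A minimal sketch of that loading step might look like this. It makes a few assumptions: the checkpoint name in the usage comment is only an example (the official Llama 2 repositories on the Hugging Face Hub are gated and require access approval), `LlamaTokenizer` needs the `sentencepiece` package, and reusing the end-of-sequence token for padding is a common workaround rather than something the original script is known to do.

```python
def load_model_and_tokenizer(model_name_or_path):
    """Load a Llama causal LM and its tokenizer from a Hub id or a local path."""
    # Imported lazily so this helper can be defined before the heavy
    # dependencies are loaded.
    from transformers import LlamaForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained(model_name_or_path)
    model = LlamaForCausalLM.from_pretrained(model_name_or_path)

    # Assumption: if no padding token is defined, reuse EOS so batches can be padded.
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    return model, tokenizer


# Example usage (downloads several gigabytes and requires access to the weights):
# model, tokenizer = load_model_and_tokenizer("meta-llama/Llama-2-7b-hf")
```

For full-parameter SFT the whole model is trained, so even the 7B variant needs substantial GPU memory. Loading in reduced precision is a frequent choice, but is left out here to keep the sketch small.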

Loading Data

The dataset is loaded according to the file format specified by the data_path argument. You can load
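One way to implement format-based loading is sketched below. The set of supported extensions, the `BUILDERS` mapping, and the helper names are assumptions for illustration, since the formats handled by the original script are not listed here. The sketch relies on the `json`, `csv`, and `parquet` builders of the `datasets` library.

```python
import os

# Hypothetical mapping from file extension to a `datasets` builder name.
BUILDERS = {".json": "json", ".jsonl": "json", ".csv": "csv", ".parquet": "parquet"}


def infer_builder(data_path):
    """Pick a `datasets` builder name from the file extension of `data_path`."""
    ext = os.path.splitext(data_path)[1].lower()
    if ext not in BUILDERS:
        raise ValueError(f"Unsupported data file format: {ext!r}")
    return BUILDERS[ext]


def load_data(data_path):
    """Load a local data file as the train split of a Hugging Face dataset."""
    # Imported lazily so the extension logic above works without `datasets` installed.
    from datasets import load_dataset

    return load_dataset(infer_builder(data_path), data_files=data_path, split="train")


print(infer_builder("data/train.jsonl"))  # json
```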
